Understanding Direct Memory Access (DMA): How Data Moves Efficiently Between Storage and Memory

Transferring data between storage and memory can slow a computer down if the CPU has to manage every step. Direct Memory Access (DMA) is a mechanism that lets a dedicated controller take over this job, freeing the CPU for other work and making data movement more efficient.

In this article, we’ll explain what DMA is, why it’s important, and how it enables efficient data movement in computer systems.

Note: Terms like MMU (memory management unit) and memory controller are used here in a simplified way. For a deeper look at these components and their roles, see my detailed articles on Virtual Memory and Memory Controllers.

Why DMA?

Without DMA, the CPU is responsible for every step of the transfer: it reads each byte (or word) from the device and writes it to memory itself. This method is known as Programmed I/O (PIO).

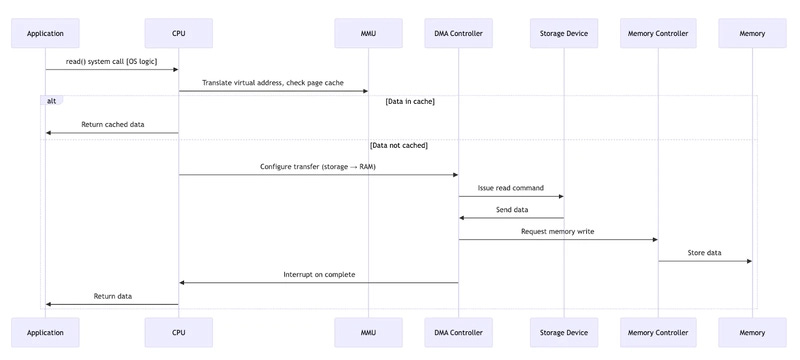

As the diagram shows, the CPU stays busy for the entire transfer and cannot handle other tasks until the last byte has been moved. Offloading this work to a DMA controller lets the CPU run other tasks while the data moves, which makes the system as a whole more efficient.
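To make the contrast concrete, here is a minimal Python simulation, not real driver code. The `Device` class and its `data_register()` method are hypothetical stand-ins for a device's data port, and `dma_read()` simply models the controller doing the copy. The point is that under programmed I/O the CPU executes one loop iteration per byte, while with DMA it spends a single step programming the controller.

```python
class Device:
    """Hypothetical storage device exposing a one-byte data register (simulation only)."""

    def __init__(self, contents: bytes) -> None:
        self._contents = contents
        self._pos = 0

    def data_register(self) -> int:
        # Each read of the data register yields the next byte from the device.
        value = self._contents[self._pos]
        self._pos += 1
        return value


def programmed_io_read(device: Device, size: int) -> tuple[bytearray, int]:
    """Copy `size` bytes with the CPU doing every step; return data and CPU steps spent."""
    buffer = bytearray(size)
    cpu_steps = 0
    for i in range(size):
        buffer[i] = device.data_register()  # CPU reads the device register...
        cpu_steps += 1                      # ...and stores the byte in memory itself
    return buffer, cpu_steps


def dma_read(device_contents: bytes, size: int) -> tuple[bytearray, int]:
    """Simulated DMA: the CPU spends one step programming the controller."""
    cpu_steps = 1                               # program source, destination, size once
    buffer = bytearray(device_contents[:size])  # the (simulated) controller moves the bytes
    return buffer, cpu_steps
```

In this simulation, moving 4096 bytes costs the programmed-I/O loop 4096 CPU steps, while the DMA path costs one.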

How DMA Works

DMA relies on a dedicated hardware controller that, once programmed by the CPU, moves data on its own. During a transfer, it takes control of the memory bus (acting as a bus master) to move data directly between devices and RAM.

The process involves three key phases:

Setup Phase

The CPU configures the DMA controller with three critical pieces of information:

Source Address → where the data is coming from.

For read() operations, the source is typically the storage device (like a disk or SSD).

For write() operations, the source is memory, where the program’s data is prepared.

Destination Address → where the data should go.

For read(), the destination is memory (so programs can use the data).

For write(), the destination is the storage device.

Transfer Size → how many bytes to move.

The CPU programs these details into the DMA controller and then steps aside.
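Real controllers are programmed by writing device-specific registers or by building descriptors in memory, and the exact layout varies by hardware. The sketch below models the three values as a hypothetical `DmaDescriptor` and shows how the direction of `read()` versus `write()` decides which side is the source. The memory address is assumed to be one the device can use directly (typically a physical address), because the controller does not go through the CPU's MMU.

```python
from dataclasses import dataclass
from enum import Enum


class Endpoint(Enum):
    MEMORY = "memory"
    STORAGE = "storage"


@dataclass(frozen=True)
class DmaDescriptor:
    """Hypothetical model of the three values the CPU programs into a DMA controller."""
    source: tuple[Endpoint, int]       # (where, memory address or block number)
    destination: tuple[Endpoint, int]
    size: int                          # number of bytes to move


def setup_transfer(op: str, memory_addr: int, block: int, size: int) -> DmaDescriptor:
    """Build a descriptor for a read() or write(); memory_addr is assumed device-visible."""
    if size <= 0:
        raise ValueError("transfer size must be positive")
    if op == "read":
        # read(): storage device -> memory
        return DmaDescriptor((Endpoint.STORAGE, block), (Endpoint.MEMORY, memory_addr), size)
    if op == "write":
        # write(): memory -> storage device
        return DmaDescriptor((Endpoint.MEMORY, memory_addr), (Endpoint.STORAGE, block), size)
    raise ValueError(f"unknown operation: {op!r}")
```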

Transfer Phase

The DMA controller moves the data without CPU involvement, leaving the CPU free to run other work. (The two may still briefly compete for access to the memory bus.)
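As a loose analogy, the sketch below uses a Python thread as a stand-in for the controller; real DMA engines are separate hardware, not software threads. The hypothetical `dma_engine` function copies a buffer in fixed-size bursts while the main thread, playing the CPU, keeps doing unrelated work.

```python
import threading


def dma_engine(src: bytes, dst: bytearray, chunk: int = 64) -> None:
    """Simulated controller: copy src into dst in bursts without the CPU's help."""
    for offset in range(0, len(src), chunk):
        dst[offset:offset + chunk] = src[offset:offset + chunk]


def run_transfer(src: bytes) -> tuple[bytearray, int]:
    dst = bytearray(len(src))
    engine = threading.Thread(target=dma_engine, args=(src, dst))
    engine.start()

    # Meanwhile, the "CPU" keeps doing unrelated work instead of copying bytes.
    other_work = sum(range(10_000))

    engine.join()  # stand-in for waiting on the completion phase
    return dst, other_work
```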

Completion Phase

The DMA controller sends an interrupt to notify the CPU when the transfer completes.
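The completion interrupt can be modeled as a callback that the simulated controller invokes when it finishes. In this sketch, the handler records the result and sets a `threading.Event` that the waiting code blocks on; `start_dma`, `interrupt_handler`, and the event are illustrative assumptions, not a kernel API. In a real kernel, the interrupt handler typically wakes the process that was sleeping on the I/O.

```python
import threading
from typing import Callable


def start_dma(src: bytes, dst: bytearray, on_complete: Callable[[int], None]) -> threading.Thread:
    """Simulated controller: copy the data, then 'raise an interrupt' via the callback."""
    def transfer() -> None:
        dst[:] = src
        on_complete(len(src))  # the completion interrupt
    t = threading.Thread(target=transfer)
    t.start()
    return t


def read_with_interrupt(src: bytes) -> tuple[bytearray, int]:
    done = threading.Event()
    result: dict[str, int] = {}

    def interrupt_handler(nbytes: int) -> None:
        # Record the outcome and wake whoever is waiting on this transfer.
        result["nbytes"] = nbytes
        done.set()

    dst = bytearray(len(src))
    start_dma(src, dst, interrupt_handler)
    done.wait()  # a real CPU would run other tasks here instead of waiting
    return dst, result["nbytes"]
```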

Overall Read Flow — read() system call

The application issues a read request via a system call.

The kernel accesses the page cache, which resides in kernel memory, through virtual addresses that the CPU's MMU translates into physical addresses.

The kernel checks the page cache in memory to see if the data is already available.

If the data is cached, the kernel copies it straight into the application's buffer and the call returns without any device I/O.

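If it is not cached, the CPU configures the DMA controller to transfer the data from the storage device into page-cache pages. It supplies addresses the device can use (typically physical addresses), since the controller does not go through the CPU's MMU.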

The DMA controller commands the storage device to send the data.

The DMA controller writes the incoming data into memory through the memory controller.

When the transfer completes, the DMA controller interrupts the CPU.

The kernel then copies the data from the page cache into the application's buffer and returns from the system call.
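The read path can be summarized as a toy simulation. The `Kernel` class, its `page_cache` dictionary, the `disk` list of blocks, the 4 KiB `PAGE_SIZE`, and the `dma_transfer` helper are all hypothetical simplifications: a real kernel tracks cached pages per file, and the controller writes to device-visible addresses. What the sketch preserves is the order of operations: check the cache, fall back to DMA on a miss, then copy the page into the caller's buffer.

```python
PAGE_SIZE = 4096  # assumed page size for this sketch


class Kernel:
    def __init__(self, disk: list[bytes]) -> None:
        self.disk = disk                           # simulated storage: one entry per block
        self.page_cache: dict[int, bytearray] = {}
        self.dma_transfers = 0                     # how many times the DMA path was used

    def dma_transfer(self, block: int) -> bytearray:
        # Stand-in for: program the controller, device sends data,
        # controller writes memory, completion interrupt fires.
        self.dma_transfers += 1
        return bytearray(self.disk[block])

    def read(self, block: int, user_buffer: bytearray) -> int:
        page = self.page_cache.get(block)
        if page is None:                           # cache miss: fetch the block via DMA
            page = self.dma_transfer(block)
            self.page_cache[block] = page
        n = min(len(user_buffer), len(page))
        user_buffer[:n] = page[:n]                 # copy from page cache into the app's buffer
        return n
```

Reading the same block twice triggers only one DMA transfer; the second call is served from the page cache.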

Overall Write Flow — write() system call

The application sends data to be written via a system call.

The kernel accesses the page cache, which lies in kernel memory, through virtual addresses that the CPU's MMU translates into physical addresses.

The kernel first copies the data from the application's buffer into the page cache.

When the buffered data is flushed, the CPU sets up the DMA controller to move it from memory to the storage device.

The DMA controller reads the buffered data from memory through the memory controller.

The DMA controller streams the data to the storage device.

After the write finishes, the DMA controller sends an interrupt to the CPU.

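The kernel then reports the write as complete. For an ordinary buffered write(), the application usually does not wait this long: the call returns as soon as the data is in the page cache, and the flush to storage happens later in the background. The application waits for the DMA transfer only when it asks for durability, for example by calling fsync() or opening the file with O_SYNC.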
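A matching toy model of the write path follows. As with a read, the names here (`WriteKernel`, its `dirty` set, `dma_to_storage()`, and `writeback()`) are hypothetical. The `sync` flag stands in for requests like `fsync()` or `O_SYNC`; without it, the DMA transfer is deferred until a later `writeback()` call.

```python
class WriteKernel:
    def __init__(self, num_blocks: int) -> None:
        self.disk: list[bytes] = [b""] * num_blocks  # simulated storage
        self.page_cache: dict[int, bytearray] = {}
        self.dirty: set[int] = set()                 # cached pages not yet on storage

    def dma_to_storage(self, block: int) -> None:
        # Stand-in for: controller reads the page from memory, streams it to
        # the device, then raises a completion interrupt.
        self.disk[block] = bytes(self.page_cache[block])

    def write(self, block: int, data: bytes, sync: bool = False) -> int:
        self.page_cache[block] = bytearray(data)     # buffer the data in the page cache
        self.dirty.add(block)
        if sync:
            self.writeback()                         # wait for the DMA transfer to finish
        return len(data)

    def writeback(self) -> None:
        # Flush every dirty page to storage via (simulated) DMA.
        for block in sorted(self.dirty):
            self.dma_to_storage(block)
        self.dirty.clear()
```

Without `sync`, `disk` still holds the old contents after `write()` returns, which mirrors why data can be lost on a crash before writeback.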
